Making slow queries fast using composite indexes in MySQL

This post expects some basic knowledge of SQL. Examples were made using MySQL 5.7.18 and run on my mid 2014 Macbook Pro. Query execution times are based on multiple executions so index caching can kick in. The use-case came from a real application and the solution is used in production.

So you have inserted preliminary data to your database and run a simple COUNT(*) query against it with a simple WHERE clause and… the spinner is still running… you check that your MySQL client hasn’t crashed… it hasn’t… you look at your empty coffee cup and expect the worst… 9 long seconds of your workday pass before the results come in. What now? You need this data, even more importantly, this is the core data of your business and you have just run your most simplest query which took NINE SECONDS!?!? Can this be it? Will your startup end here? Because of an slow SQL query? You thought MySQL was supposed to handle millions of rows easily but even your simple query takes whopping 9 seconds?

During this post we’ll look at the following things:

- How to define which indexes to create based on the queries you run?

- How to check if your query is / is not using given indexes (using EXPLAIN)?

- How to help SQL choose the correct indexes?

- How much does this really help?

The general idea of the post is to help you to find and solve these kinds of problems with different kind of queries all around your application. Even though we’ll use an example use-case, the solution is not restricted to given dataset or schemas and can be used on a wide range of different queries.

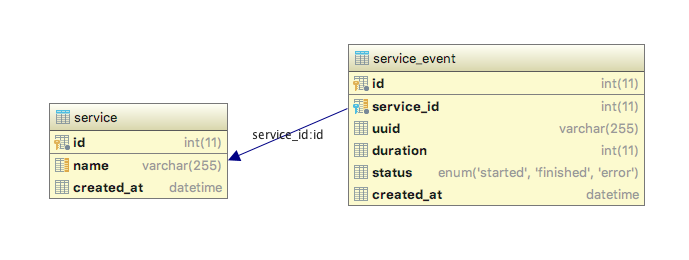

Firstly, let’s lay down our example use-case. We have services, and these services do something with our API and our API logs the events into services_events table. It’s a simple table, it holds the service_id (fk key to service table), uuid, duration of the event, event status and when the event took place as datetime. The table has a bunch of rows, in the example we’re going to use dataset with around ~781k rows.

And the goal is to have a monitoring page with error count and amount of “slow” events. Events are considered slow when their duration exceeds >1s.

Rough schema (note that this is the bare minimum schema we’ll need for testing. In a real application you’ll have a lot more information available) so you have an idea how the DB might look like:

You can also download the schema + data from here (you need to create the database yourself, dump only has tables and data, already includes indexes created in this post also):

https://www.dropbox.com/s/fhphk1s4kvz9u3l/index-testing-test-data-22072018.sql?dl=1

As you can see, we currently have 3 indexes. Both tables have ID column as a primary key, which is indexed by default and service_event table has a foreign key column service_id which is also indexed.

This is our test data (note that for testing purposes I’m using a smaller dataset to keep myself sane, the time to run the non-indexes example queries will grow quickly when service_events table gets big), we have a total of 42 services with ~781k events:

Now, lets lay down basic queries we would like to run for the monitoring page:

As you can see both of these queries take around 1.6s to complete even though their results are cardinally different. This is because MySQL goes through the exact same amount of data for both of these queries. We can investigate this by using EXPLAIN:

MySQL reports that for both of these queries, it’ll go through 777896 rows and use the service_events_service_id_fk index.

Now let’s talk about where would you start if you want to index your table(s). Some people just like to create indexes for columns that they know are often used in searches (name, email etc). But good indexes serve the queries you run. Of course, when creating schemas you might not yet know which queries you’ll run, so it’s still a good idea to create basic indexes, but do not just index all fields in every table, as indexes use up memory and have a performance hit on your insert/update/delete queries. So creating a bunch of indexes you are actually not using will be bad for the performance of your database. It is also a good practice to visit your index list from time to time in the application development flow. So you can remove the indexes you are not using or add ones that’ll speed up your queries.

In our example, we already know which queries are slow, so we’ll use these as our base information for adding indexes. In the above queries, the WHERE clauses use 2 columns each (3 different columns in total). These columns are: status, duration, and created_at. So let’s just add basic, one field indexes for these columns and then run our queries again to see how the EXPLAIN output and execution time changes:

So status query sped up considerably, about 10 times, but duration query remained slow. One thing to take away from this is that the fact you have all your columns used in WHERE clauses indexes, doesn’t mean the queries magically all become fast. You can also see for both of the EXPLAIN outputs that possible_keys list now has the newly added keys, this is good, MySQL knows the keys are there and is able to use them, but does it? For the status query, it reports that it uses the status_index, good. Basically, it means that it used the index to get the list of rows that have the status we ask for, and then it uses regular where to figure out which of these rows were added in the last week. This also means that by the time it does the created_at match, it will only have the rows with status ‘error’, so the dataset it needs to go through is considerably smaller than in the original query.

Duration query is not doing so good, we can see MySQL picked the created_at index which we added, but the query itself still takes around 1.6s. So we are stuck again, we added indexes for all the columns used in the WHERE clause but the query is still slow, now what?

Let’s get into multi-column indexes and create indexes that’ll directly serve the queries we run. So we’ll add 2 new indexes, one for each of the queries. First one would be status + created_at and the second one would be duration + created_at.

Status query is now 24ms, which is ~65 times faster than in the start. 24ms is an execution time I can be happy while having ~780k rows. But duration query is still not doing so good, we can see the possible_keys list has the composite index we added, but for some reason it is not using it.

This is where index hints (USE INDEX, FORCE INDEX) come in. With these, we can help the MySQL optimiser to make the right decision while choosing an index. Please note, however, that using these should be the last resort and only done so on static queries. If you add these for queries which WHERE clauses can change (in terms of columns used) you can drastically worsen the performance of the queries by not letting optimiser do its job. In our case we know that the columns used in the WHERE clause will not change, so let’s try using index hints:

As you can see, using index hints can further increase the query speed. The difference in USE INDEX And FORCE INDEX comes from (Quote from MySQL documentation):

The

FORCE INDEXhint acts likeUSE INDEX (index_list), with the addition that a table scan is assumed to be very expensive. In other words, a table scan is used only if there is no way to use one of the named indexes to find rows in the table.

And that’s it. We’ve tuned the execution time down to a manageable level for both of these queries just by using indexes.

Bonus #1: I doubled the dataset size and ran all the queries again to see how they would perform:

As you can see, even with 1.5 million rows the final query speeds are in an acceptable range.

Bonus 2#: You’ll most likely want to see the service name and search by it as well, this can be done easily by adding a JOIN statement with multiple ON clauses.

Even though we’re now also taking data from another table, the execution time is still acceptable. This example is run with composite index in place in service_event table, but service table doesn’t have an index for name column, so adding that will further speed this query up (though service table is currently very small and adding index to it will have minimal benefit with this data).

TL;TR:

- Use EXPLAIN to figure out if and what indexes your queries are currently using

- Create composite indexes based on the actual WHERE clauses of your queries

- Use index hints (USE INDEX, FORCE INDEX) if necessary to help MySQL optimiser to choose correct index